Google’s Search Console API provides statistics regarding how users interact with your websites via Google search (keywords, impressions, clicks, etc.).

While Google provides a Web Console, it can be convenient to access the API programmatically, for instance to receive analytics in a daily/weekly/monthly email. This article:

- Introduces the necessary boilerplate to use the Search API (create a project, a specific user, a key for that user, how to allow that user to access stats for a given site);

- Presents how to use the official Go wrapper to:

  - list domains;

  - access statistics for the most recent day for which there is analytics data;

  - perform a bit of server-side filtering to look for the Google search queries matching a given page.

search-console is a small tool wrapping the Go code presented in this article.

Nubra valley in Northern India, Ladakh region by Rajat Tyagi through wikimedia.org – CC-BY-SA-3.0

Boilerplate

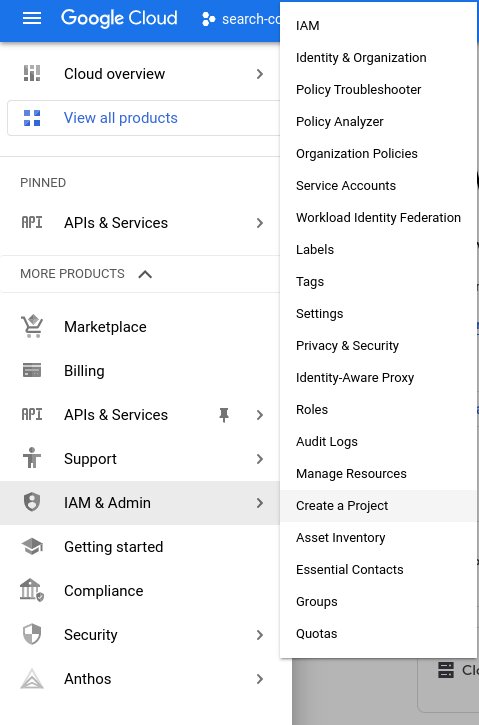

Create a project

Assuming you already have a Google Cloud account (a Gmail account, really), the official procedure is rather straightforward; essentially:

- Go to the Google Cloud Console;

- Click the burger menu, IAM & Admin, then Create a Project;

- Fill in the form and click Create.

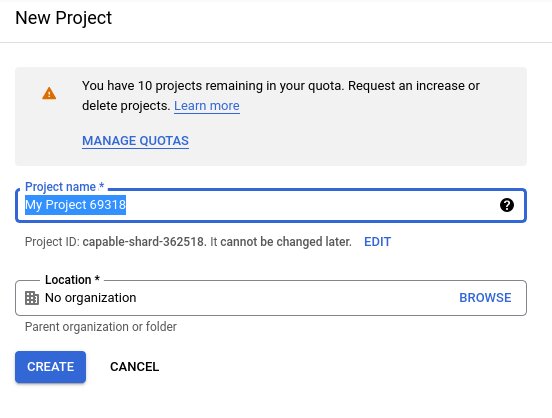

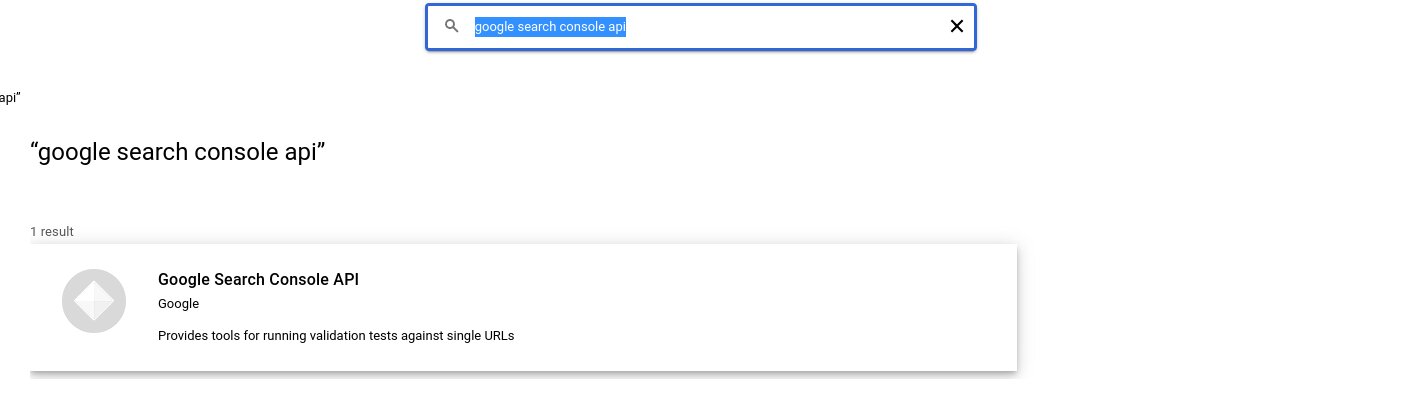

Enable access to the Search Console API for the project

Again, the procedure is quite clear from the official documentation:

- Go to the Google Cloud Console;

- Make sure the previously created project is selected; you can change project using the list immediately to the right of the burger menu;

- In the burger menu, APIs & Services, then Library;

- From there, search for “google search console api”;

- There should be a single Google Search Console API hit;

- You may click on the result and enable the API.

Create a Service account

There are different types of credentials:

- API key;

- OAuth client ID;

- Service account.

To get full, automated access to the API, a Service account is required. By comparison, OAuth requires a human to validate API access from a browser, while an API key only provides access to public data.

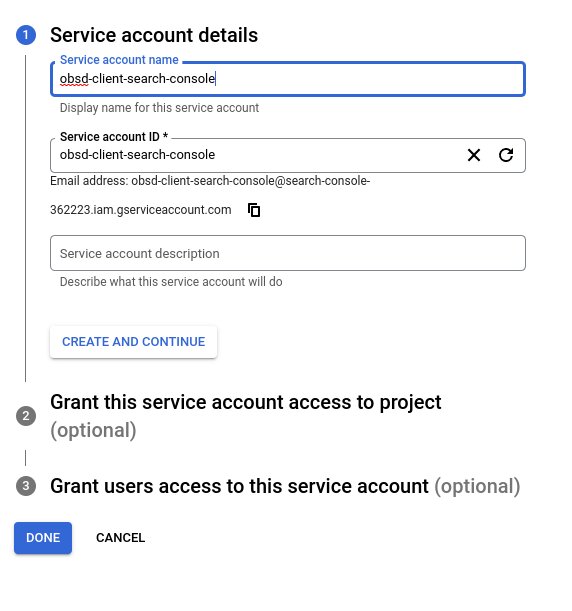

The procedure to create a Service account is as follows:

- There’s a little bit of background regarding how Google’s APIs handle access to resources that you may want to familiarize yourself with;

- Go to the Google Cloud Console;

- Click the burger menu, IAM & Admin, then Service accounts;

- Click on + Create Service Account at the top;

- Fill in the form;

- We’ll need the account email address later on, so you may want to fetch it now; alternatively, it can be retrieved from the details page of the Service account.

Note: That seems to be all we need to do: you can grant our Service account access to the project, but even without it, the account still seems able to access the API, at least read-only.

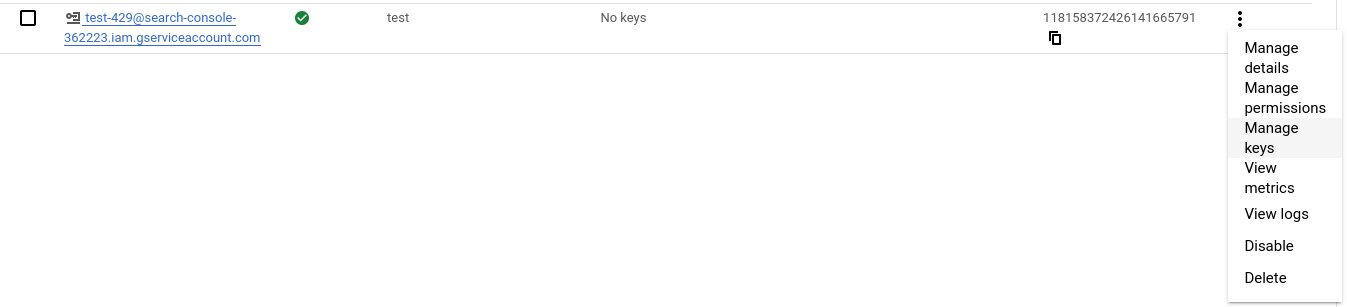

Generate a key for the Service account

As before, we’ll start by going to the Service accounts tab

of the IAM

& Admin entry:

- Go to the cloud console, make sure the correct project is selected;

- Click the burger menu,

IAM& AdminthenService accounts; - On the row corresponding to the newly created Service account,

click the triple dots at the far right, and select

Manage Keys;

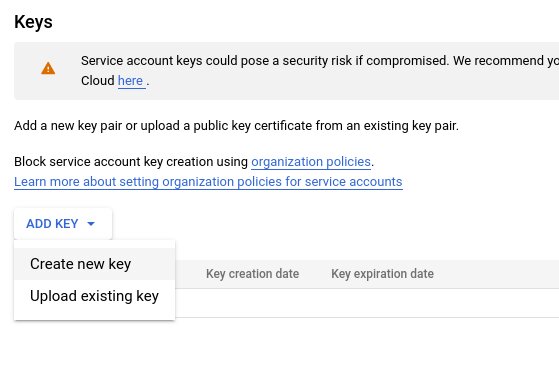

- Click

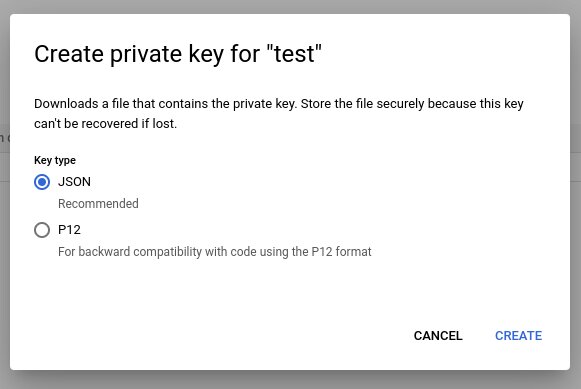

Add key, selectCreate new key;

- Select

JSONandCreate

- Your browser should download a

<something>.jsonfile: this is the key we’ll later need to provide to our Go program to access the API.
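Since the Service account's email address is needed in the next step, it can be handy to read it back from the downloaded key. The sketch below assumes the key file is a JSON object with a `type` field set to `service_account` and a `client_email` field; `parseKey`, `serviceAccountKey` and the sample content are hypothetical names and data introduced for illustration, and the real file holds more fields (private key, identifiers, URLs).

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
	"log"
	"os"
)

// sampleKey is a made-up, heavily trimmed key file, used when no
// path is given on the command line.
const sampleKey = `{"type": "service_account", "client_email": "stats@my-project.iam.gserviceaccount.com"}`

// serviceAccountKey mirrors only the two fields used here.
type serviceAccountKey struct {
	Type        string `json:"type"`
	ClientEmail string `json:"client_email"`
}

// parseKey decodes the content of a key file and checks that it
// looks like a Service account key.
func parseKey(data []byte) (*serviceAccountKey, error) {
	var k serviceAccountKey
	if err := json.Unmarshal(data, &k); err != nil {
		return nil, err
	}
	if k.Type != "service_account" {
		return nil, fmt.Errorf("unexpected key type %q", k.Type)
	}
	if k.ClientEmail == "" {
		return nil, errors.New("no client_email in key file")
	}
	return &k, nil
}

func main() {
	data := []byte(sampleKey)
	if len(os.Args) > 1 {
		var err error
		if data, err = os.ReadFile(os.Args[1]); err != nil {
			log.Fatal(err)
		}
	}
	k, err := parseKey(data)
	if err != nil {
		log.Fatal(err)
	}
	// This is the address to add as a user in the Search Console.
	fmt.Println(k.ClientEmail)
}
```

Run with the path of the downloaded file as first argument to print the address stored in it.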

Share domain ownership with the (new) Service account

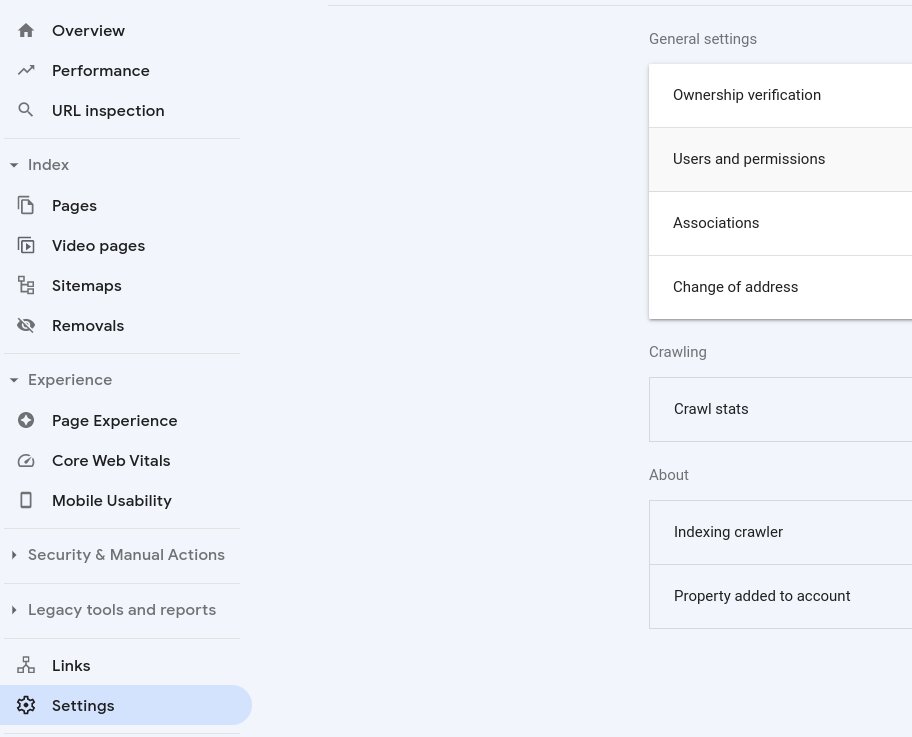

Assuming you’ve already registered domains on the Search Web Console, those won’t automatically be available to the Service account. The procedure to make them available is as follows:

- Go to the Search Web Console;

- Select the (sub)domain of interest;

- In the left menu, scroll down and select Settings, then Users and permissions;

- Click + Add User in the top left corner;

- Fill in the email address of the Service account; from a quick test, it seems that Restricted permissions at least allow for read-only access to the API.

Note: If you didn’t register domains before, you can do so either from the Search Web Console or through some APIs. I haven’t tried the latter (yet?), but you may want to look at both:

- the Search API (webmasters-tools), that is the one we’ll be using in a moment;

- or the Site verification API.

Doing so from the Search Web Console is documented here (Add a website property to Search Console); you’ll likely also want to head here (Verify your site ownership) afterwards, for the procedure by which Google verifies that you do own the domains in question.

Shyok river (“river of death”) in Northern India, Ladakh region by Eatcha

API access

Introduction

Generally speaking, Google’s APIs seem to be either:

- REST APIs, with JSON-encoded input/output over HTTP(S);

- gRPC APIs, with protocol buffers encoded data, again over HTTP(S).

The searchconsole/v1 API provides free access to the Google search queries for one’s websites. It seems to be a REST API. There’s an official Go wrapper sparing us some common API usage boilerplate (e.g. connection and authentication handling).

Note: If you peek through the code, there seems to be a webmasters/v3 API which is documented in a fairly similar manner; it seems that webmasters/v3 is a subset of searchconsole/v1.

Note: Some features provided by the API can be performed without the API; for instance, the following simple GET has been used to inform Google of a new/updated sitemap (Google announced the deprecation of this ping endpoint in 2023, so it may no longer work):

curl 'https://www.google.com/ping?sitemap=https://example.com/path/to/sitemap.xml'

Service

We’ll now explore some aspects of the Go wrapper, starting with the Service type, returned by the package’s constructor searchconsole.NewService(). Some of its fields, such as Sitemaps or Sites, are sub-services, on which are defined methods matching the access points provided by the Search API:

type Service struct {

BasePath string // API endpoint base URL

UserAgent string // optional additional User-Agent fragment

Searchanalytics *SearchanalyticsService

Sitemaps *SitemapsService

Sites *SitesService

UrlInspection *UrlInspectionService

UrlTestingTools *UrlTestingToolsService

// contains filtered or unexported fields

}

SitesService

For instance, SitesService (the type of Service.Sites) defines, among others, the List() method, which prepares an API call, SitesListCall, corresponding to the

GET https://www.googleapis.com/webmasters/v3/sites

access, used to list the user’s Search Console sites.

func (r *SitesService) List() *SitesListCall

type SitesListCall struct {

// contains filtered or unexported fields

}

Some more methods are in turn defined on SitesListCall, among which the Do() method, which executes the API call, then unmarshals the resulting JSON into a Go object of type SitesListResponse:

func (c *SitesListCall) Do(opts ...googleapi.CallOption) (*SitesListResponse, error)

type SitesListResponse struct {

// SiteEntry: Contains permission level information about a Search

// Console site. For more information, see Permissions in Search Console

// (https://support.google.com/webmasters/answer/2451999).

SiteEntry []*WmxSite `json:"siteEntry,omitempty"`

// ServerResponse contains the HTTP response code and headers from the

// server.

googleapi.ServerResponse `json:"-"`

// ForceSendFields is a list of field names (e.g. "SiteEntry") to

// unconditionally include in API requests. By default, fields with

// empty or default values are omitted from API requests. However, any

// non-pointer, non-interface field appearing in ForceSendFields will be

// sent to the server regardless of whether the field is empty or not.

// This may be used to include empty fields in Patch requests.

ForceSendFields []string `json:"-"`

// NullFields is a list of field names (e.g. "SiteEntry") to include in

// API requests with the JSON null value. By default, fields with empty

// values are omitted from API requests. However, any field with an

// empty value appearing in NullFields will be sent to the server as

// null. It is an error if a field in this list has a non-empty value.

// This may be used to include null fields in Patch requests.

NullFields []string `json:"-"`

}

The SiteEntry field, of type []*WmxSite, is the one containing the data of main interest to us here:

type WmxSite struct {

// PermissionLevel: The user's permission level for the site.

//

// Possible values:

// "SITE_PERMISSION_LEVEL_UNSPECIFIED"

// "SITE_OWNER" - Owner has complete access to the site.

// "SITE_FULL_USER" - Full users can access all data, and perform most

// of the operations.

// "SITE_RESTRICTED_USER" - Restricted users can access most of the

// data, and perform some operations.

// "SITE_UNVERIFIED_USER" - Unverified user has no access to site's

// data.

PermissionLevel string `json:"permissionLevel,omitempty"`

// SiteUrl: The URL of the site.

SiteUrl string `json:"siteUrl,omitempty"`

// ServerResponse contains the HTTP response code and headers from the

// server.

googleapi.ServerResponse `json:"-"`

// ForceSendFields is a list of field names (e.g. "PermissionLevel") to

// unconditionally include in API requests. By default, fields with

// empty or default values are omitted from API requests. However, any

// non-pointer, non-interface field appearing in ForceSendFields will be

// sent to the server regardless of whether the field is empty or not.

// This may be used to include empty fields in Patch requests.

ForceSendFields []string `json:"-"`

// NullFields is a list of field names (e.g. "PermissionLevel") to

// include in API requests with the JSON null value. By default, fields

// with empty values are omitted from API requests. However, any field

// with an empty value appearing in NullFields will be sent to the

// server as null. It is an error if a field in this list has a

// non-empty value. This may be used to include null fields in Patch

// requests.

NullFields []string `json:"-"`

}

Test application: listing domains

With all that said, we can now write a little bit of code to access and print the available sites:

package main

import (

"log"

"fmt"

"context"

"google.golang.org/api/searchconsole/v1"

)

func main() {

// Will rely on e.g. environ's GOOGLE_APPLICATION_CREDENTIALS

// to find the credential file

scs, err := searchconsole.NewService(context.Background())

if err != nil {

log.Fatal(err)

}

slr, err := scs.Sites.List().Do()

if err != nil {

log.Fatal(err)

}

for _, e := range slr.SiteEntry {

fmt.Println(e.SiteUrl)

}

}

Note: You will need to set the environment variable GOOGLE_APPLICATION_CREDENTIALS to point to the previously downloaded key JSON file to run the code.
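A missing or mistyped path tends to surface as a fairly opaque error at the first API call. A minimal pre-flight check, assuming the key is provided through the environment variable only (the library may also locate credentials by other means, which this sketch ignores); `checkKeyPath` is a hypothetical helper, not part of the wrapper:

```go
package main

import (
	"errors"
	"fmt"
	"os"
)

// checkKeyPath reports whether p, the value of
// GOOGLE_APPLICATION_CREDENTIALS, points to an existing regular file.
// It does not validate the content of the file.
func checkKeyPath(p string) error {
	if p == "" {
		return errors.New("GOOGLE_APPLICATION_CREDENTIALS is not set")
	}
	info, err := os.Stat(p)
	if err != nil {
		return fmt.Errorf("cannot access key file: %w", err)
	}
	if info.IsDir() {
		return fmt.Errorf("%s is a directory, expected a JSON key file", p)
	}
	return nil
}

func main() {
	if err := checkKeyPath(os.Getenv("GOOGLE_APPLICATION_CREDENTIALS")); err != nil {
		fmt.Fprintln(os.Stderr, "warning:", err)
		return
	}
	fmt.Println("key file looks usable")
}
```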

Accessing other routes requires the same approach:

- Identify the main sub-service of the Service type;

- Look for a method matching what we’re trying to do;

- Look for the definition of the corresponding return type so as to retrieve the desired data.

This specific API being rather small, it’s rather easy to find one’s way.
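To make the link between a route and its return type more concrete, here is a sketch of what a call such as Sites.List().Do() roughly amounts to: an HTTP GET whose JSON response is unmarshalled into a struct. It does not use the wrapper and leaves authentication out entirely; `site`, `sitesList`, `listSites` and `fakeAPI` are hypothetical names, and the payload served by the local test server is made up (in particular, the spelling of the permission value is an assumption).

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
)

// site and sitesList mirror, in simplified form, the WmxSite and
// SitesListResponse types of the Go wrapper.
type site struct {
	SiteUrl         string `json:"siteUrl,omitempty"`
	PermissionLevel string `json:"permissionLevel,omitempty"`
}

type sitesList struct {
	SiteEntry []*site `json:"siteEntry,omitempty"`
}

// listSites performs the GET and unmarshals the JSON response.
func listSites(baseURL string) (*sitesList, error) {
	resp, err := http.Get(baseURL + "/webmasters/v3/sites")
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	var sl sitesList
	if err := json.NewDecoder(resp.Body).Decode(&sl); err != nil {
		return nil, err
	}
	return &sl, nil
}

// fakeAPI stands in for the real endpoint; the payload is made up.
func fakeAPI() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		if r.URL.Path != "/webmasters/v3/sites" {
			http.NotFound(w, r)
			return
		}
		w.Header().Set("Content-Type", "application/json")
		fmt.Fprint(w, `{"siteEntry":[{"siteUrl":"https://example.com/","permissionLevel":"siteOwner"}]}`)
	}))
}

func main() {
	srv := fakeAPI()
	defer srv.Close()

	sl, err := listSites(srv.URL)
	if err != nil {
		log.Fatal(err)
	}
	for _, e := range sl.SiteEntry {
		fmt.Println(e.SiteUrl, e.PermissionLevel)
	}
}
```

The wrapper adds authentication, error decoding and call options on top of this, which is why it remains the more convenient choice for real use.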

Daily data access

The API’s documentation informs us that the analytics data are accessible via searchanalytics/query, mapped in the Go wrapper to Service.Searchanalytics.Query() (where Searchanalytics is of type *SearchanalyticsService):

func (r *SearchanalyticsService) Query(siteUrl string, searchanalyticsqueryrequest *SearchAnalyticsQueryRequest) *SearchanalyticsQueryCall

We can see that the query parameters are encoded by the SearchAnalyticsQueryRequest struct:

type SearchAnalyticsQueryRequest struct {

// AggregationType: [Optional; Default is \"auto\"] How data is

// aggregated. If aggregated by property, all data for the same property

// is aggregated; if aggregated by page, all data is aggregated by

// canonical URI. If you filter or group by page, choose AUTO; otherwise

// you can aggregate either by property or by page, depending on how you

// want your data calculated; see the help documentation to learn how

// data is calculated differently by site versus by page. **Note:** If

// you group or filter by page, you cannot aggregate by property. If you

// specify any value other than AUTO, the aggregation type in the result

// will match the requested type, or if you request an invalid type, you

// will get an error. The API will never change your aggregation type if

// the requested type is invalid.

//

// Possible values:

// "AUTO"

// "BY_PROPERTY"

// "BY_PAGE"

AggregationType string `json:"aggregationType,omitempty"`

// DataState: The data state to be fetched, can be full or all, the

// latter including full and partial data.

//

// Possible values:

// "DATA_STATE_UNSPECIFIED" - Default value, should not be used.

// "FINAL" - Include full final data only, without partial.

// "ALL" - Include all data, full and partial.

DataState string `json:"dataState,omitempty"`

// DimensionFilterGroups: [Optional] Zero or more filters to apply to

// the dimension grouping values; for example, 'query contains \"buy\"'

// to see only data where the query string contains the substring

// \"buy\" (not case-sensitive). You can filter by a dimension without

// grouping by it.

DimensionFilterGroups []*ApiDimensionFilterGroup `json:"dimensionFilterGroups,omitempty"`

// Dimensions: [Optional] Zero or more dimensions to group results by.

// Dimensions are the group-by values in the Search Analytics page.

// Dimensions are combined to create a unique row key for each row.

// Results are grouped in the order that you supply these dimensions.

//

// Possible values:

// "DATE"

// "QUERY"

// "PAGE"

// "COUNTRY"

// "DEVICE"

// "SEARCH_APPEARANCE"

Dimensions []string `json:"dimensions,omitempty"`

// EndDate: [Required] End date of the requested date range, in

// YYYY-MM-DD format, in PST (UTC - 8:00). Must be greater than or equal

// to the start date. This value is included in the range.

EndDate string `json:"endDate,omitempty"`

// RowLimit: [Optional; Default is 1000] The maximum number of rows to

// return. Must be a number from 1 to 25,000 (inclusive).

RowLimit int64 `json:"rowLimit,omitempty"`

// SearchType: [Optional; Default is \"web\"] The search type to filter

// for.

//

// Possible values:

// "WEB"

// "IMAGE"

// "VIDEO"

// "NEWS" - News tab in search.

// "DISCOVER" - Discover.

// "GOOGLE_NEWS" - Google News (news.google.com or mobile app).

SearchType string `json:"searchType,omitempty"`

// StartDate: [Required] Start date of the requested date range, in

// YYYY-MM-DD format, in PST time (UTC - 8:00). Must be less than or

// equal to the end date. This value is included in the range.

StartDate string `json:"startDate,omitempty"`

// StartRow: [Optional; Default is 0] Zero-based index of the first row

// in the response. Must be a non-negative number.

StartRow int64 `json:"startRow,omitempty"`

// Type: Optional. [Optional; Default is \"web\"] Type of report: search

// type, or either Discover or Gnews.

//

// Possible values:

// "WEB"

// "IMAGE"

// "VIDEO"

// "NEWS" - News tab in search.

// "DISCOVER" - Discover.

// "GOOGLE_NEWS" - Google News (news.google.com or mobile app).

Type string `json:"type,omitempty"`

// ForceSendFields is a list of field names (e.g. "AggregationType") to

// unconditionally include in API requests. By default, fields with

// empty or default values are omitted from API requests. However, any

// non-pointer, non-interface field appearing in ForceSendFields will be

// sent to the server regardless of whether the field is empty or not.

// This may be used to include empty fields in Patch requests.

ForceSendFields []string `json:"-"`

// NullFields is a list of field names (e.g. "AggregationType") to

// include in API requests with the JSON null value. By default, fields

// with empty values are omitted from API requests. However, any field

// with an empty value appearing in NullFields will be sent to the

// server as null. It is an error if a field in this list has a

// non-empty value. This may be used to include null fields in Patch

// requests.

NullFields []string `json:"-"`

}

The following piece of code:

- Queries the stats from ten days ago to today; we ask for one row of data per (date, page) pair, so as to be able to compute per-day statistics on the time interval;

- In the resulting rows, looks for the most recent date on which we had clicks;

- Displays the statistics for this date only on stdout.

Note: If you’ve used the Web Console, you may have observed that there’s around 2 days of delay between the current date and the last date for which stats are available, and that the most recent data is flagged as “Fresh data - usually replaced with final data within a few days”. By default, this data is not returned, but we can access it by setting the DataState parameter to ALL. It seems that using the last day for which we had clicks is a good enough approximation to get numbers similar to the ones displayed by the Web Console.

package main

import (

"log"

"fmt"

"time"

"os"

"path"

"context"

"google.golang.org/api/searchconsole/v1"

)

// YYYY-MM-DD format for time.Parse()/.Format()

var YYYYMMDD = "2006-01-02"

// last day for which we had clicks

func getLastDay(xs []*searchconsole.ApiDataRow) (string, error) {

n := time.Now().UTC().AddDate(-20, 0, 0)

for _, x := range xs {

if x.Clicks == 0 {

continue

}

if len(x.Keys) == 0 {

return "", fmt.Errorf("Empty Keys")

}

d, err := time.Parse(YYYYMMDD , x.Keys[0])

if err != nil {

return "", fmt.Errorf("First Keys element is not an YYYY-MM-DD: %s", x.Keys[0])

}

if d.After(n) {

n = d

}

}

return n.Format(YYYYMMDD), nil

}

func printHeader(xs []*searchconsole.ApiDataRow, last string) {

nc := 0

ni := 0

for _, x := range xs {

// NOTE: always there, cf. getLastDay()

if x.Keys[0] != last {

continue

}

nc += int(x.Clicks)

ni += int(x.Impressions)

}

// NOTE: averaging the x.Ctr yields a result discordant

// with what's displayed on the Web Console; likely

// an accumulation of rounding errors. This is much closer:

ct := 0.0

// avoid a NaN when there are no impressions

if ni > 0 {

ct = float64(nc)/float64(ni)

}

fmt.Printf("----------------------------------\n")

fmt.Printf("%-10s %-10s %-5s %s\n", "Clicks", "Impr.", "Ctr.", "Pages")

fmt.Printf("%-10d %-10d %-5.2f %s\n", nc, ni, ct*100, "Total")

fmt.Printf("----------------------------------\n")

}

func queryLastAnalytics(scs *searchconsole.Service, s string) error {

n := time.Now().UTC()

// 10 days ago should be good enough; stats are usually

// updated in less than a day, around 2d before today

args := searchconsole.SearchAnalyticsQueryRequest{

Dimensions : []string{"DATE", "PAGE"},

StartDate : n.AddDate(0, 0, -10).Format(YYYYMMDD),

EndDate : n.Format(YYYYMMDD),

DataState : "ALL",

}

saqr, err := scs.Searchanalytics.Query(s, &args).Do()

if err != nil {

return err

}

// shortcut

xs := saqr.Rows

last, err := getLastDay(xs)

if err != nil {

return err

}

fmt.Printf("----------------------------------\n")

fmt.Printf("Last day: %s\n", last)

printHeader(xs, last)

for _, x := range xs {

// NOTE: always there, cf. getLastDay()

if x.Keys[0] != last {

continue

}

fmt.Printf("%-10d %-10d %-5.2f %s\n", int(x.Clicks), int(x.Impressions), x.Ctr*100, x.Keys[1])

}

return nil

}

func main() {

// Will rely on e.g. environ's GOOGLE_APPLICATION_CREDENTIALS

// to find the credential file

scs, err := searchconsole.NewService(context.Background())

if err != nil {

log.Fatal(err)

}

if len(os.Args) < 2 {

log.Fatalf("%s <site>", path.Base(os.Args[0]))

}

if err := queryLastAnalytics(scs, os.Args[1]); err != nil {

log.Fatal(err)

}

}
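The date selection at the heart of the program can be exercised without any API access. The sketch below is a variant of getLastDay() under stated assumptions: `row` is a hypothetical stand-in for the wrapper's ApiDataRow, the sample rows are made up, and, unlike the original, it reports the absence of any click with a boolean rather than falling back to a date twenty years in the past.

```go
package main

import (
	"fmt"
	"time"
)

// row is a stand-in for the wrapper's ApiDataRow, reduced to the
// fields used here.
type row struct {
	Keys   []string // Keys[0] is the date when "DATE" is the first dimension
	Clicks float64
}

// layout is Go's reference date, written in YYYY-MM-DD form.
const layout = "2006-01-02"

// lastDayWithClicks returns the most recent date having at least one
// click, and false if no row qualifies.
func lastDayWithClicks(rows []row) (string, bool) {
	var last time.Time
	found := false
	for _, r := range rows {
		if r.Clicks == 0 || len(r.Keys) == 0 {
			continue
		}
		d, err := time.Parse(layout, r.Keys[0])
		if err != nil {
			continue // not a date: skip the row rather than fail
		}
		if !found || d.After(last) {
			last, found = d, true
		}
	}
	if !found {
		return "", false
	}
	return last.Format(layout), true
}

func main() {
	rows := []row{ // made-up sample data
		{Keys: []string{"2024-03-01", "https://example.com/a"}, Clicks: 3},
		{Keys: []string{"2024-03-02", "https://example.com/a"}, Clicks: 1},
		{Keys: []string{"2024-03-03", "https://example.com/b"}, Clicks: 0},
	}
	if d, ok := lastDayWithClicks(rows); ok {
		fmt.Println("last day with clicks:", d) // 2024-03-02
	}
}
```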

Filtering / Google queries for a specific page

The search console provides access to Google search queries. Despite being, as far as I can tell, incomplete (e.g. numbers of clicks don’t add up), it’s still a good way to get an idea of what triggers Google to display your page.

We could query broadly and do the filtering “by hand”, but because of the limited number of returned rows, this is better delegated to the API.
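For completeness, the "by hand" alternative would mean paging through all rows with the StartRow and RowLimit fields shown earlier. A rough sketch of such a loop follows; it assumes that a page past the end of the data comes back empty, and `fetchFunc`, `fetchAll` and `fakeFetch` are hypothetical names, with an in-memory list standing in for the API.

```go
package main

import (
	"errors"
	"fmt"
)

// fetchFunc is a hypothetical stand-in for one API call: it returns
// at most rowLimit rows, starting at the zero-based index startRow.
type fetchFunc func(startRow, rowLimit int64) ([]string, error)

// fetchAll pages through the results until an empty page is returned.
func fetchAll(fetch fetchFunc, rowLimit int64) ([]string, error) {
	if rowLimit <= 0 {
		return nil, errors.New("rowLimit must be positive")
	}
	var all []string
	for start := int64(0); ; start += rowLimit {
		page, err := fetch(start, rowLimit)
		if err != nil {
			return nil, err
		}
		if len(page) == 0 {
			return all, nil
		}
		all = append(all, page...)
	}
}

// fakeFetch serves slices of an in-memory list, to exercise fetchAll.
func fakeFetch(data []string) fetchFunc {
	return func(startRow, rowLimit int64) ([]string, error) {
		n := int64(len(data))
		if startRow >= n {
			return nil, nil
		}
		end := startRow + rowLimit
		if end > n {
			end = n
		}
		return data[startRow:end], nil
	}
}

func main() {
	data := []string{"q1", "q2", "q3", "q4", "q5"} // made-up rows
	all, err := fetchAll(fakeFetch(data), 2)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(len(all), "rows fetched")
}
```

Each page costs one request, which is another reason to let the API do the filtering when only one page's queries are of interest.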

We’ll use the same Query function as before, with its SearchAnalyticsQueryRequest parameters struct, but this time, we’ll be querying for the dimension QUERY, containing Google search queries. The DimensionFilterGroups field, of type []*ApiDimensionFilterGroup, allows us to filter the returned rows, so as to retrieve only those corresponding to a given page.

type ApiDimensionFilterGroup struct {

Filters []*ApiDimensionFilter `json:"filters,omitempty"`

GroupType string `json:"groupType,omitempty"`

// ForceSendFields is a list of field names (e.g. "Filters") to

// unconditionally include in API requests. By default, fields with

// empty or default values are omitted from API requests. However, any

// non-pointer, non-interface field appearing in ForceSendFields will be

// sent to the server regardless of whether the field is empty or not.

// This may be used to include empty fields in Patch requests.

ForceSendFields []string `json:"-"`

// NullFields is a list of field names (e.g. "Filters") to include in

// API requests with the JSON null value. By default, fields with empty

// values are omitted from API requests. However, any field with an

// empty value appearing in NullFields will be sent to the server as

// null. It is an error if a field in this list has a non-empty value.

// This may be used to include null fields in Patch requests.

NullFields []string `json:"-"`

}

/* Other main type of interest to us: */

type ApiDimensionFilter struct {

Dimension string `json:"dimension,omitempty"`

Expression string `json:"expression,omitempty"`

Operator string `json:"operator,omitempty"`

// ForceSendFields is a list of field names (e.g. "Dimension") to

// unconditionally include in API requests. By default, fields with

// empty or default values are omitted from API requests. However, any

// non-pointer, non-interface field appearing in ForceSendFields will be

// sent to the server regardless of whether the field is empty or not.

// This may be used to include empty fields in Patch requests.

ForceSendFields []string `json:"-"`

// NullFields is a list of field names (e.g. "Dimension") to include in

// API requests with the JSON null value. By default, fields with empty

// values are omitted from API requests. However, any field with an

// empty value appearing in NullFields will be sent to the server as

// null. It is an error if a field in this list has a non-empty value.

// This may be used to include null fields in Patch requests.

NullFields []string `json:"-"`

}

Then, it’s just a matter of building a filter to target a specific page. Note that fortunately, the API allows filtering on a non-queried dimension (we’re filtering on PAGE but only ask for QUERY).
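To see what such a filter looks like on the wire, the request can be marshalled to JSON locally. The types below are trimmed-down local copies of the wrapper's request structs, reusing the JSON tags from the definitions above; `pageQueries` is a hypothetical helper, and the URL and dates are placeholders.

```go
package main

import (
	"encoding/json"
	"fmt"
	"log"
)

// Local, simplified copies of ApiDimensionFilter,
// ApiDimensionFilterGroup and SearchAnalyticsQueryRequest.
type filter struct {
	Dimension  string `json:"dimension,omitempty"`
	Operator   string `json:"operator,omitempty"`
	Expression string `json:"expression,omitempty"`
}

type filterGroup struct {
	Filters []*filter `json:"filters,omitempty"`
}

type queryRequest struct {
	Dimensions            []string       `json:"dimensions,omitempty"`
	DimensionFilterGroups []*filterGroup `json:"dimensionFilterGroups,omitempty"`
	StartDate             string         `json:"startDate,omitempty"`
	EndDate               string         `json:"endDate,omitempty"`
	DataState             string         `json:"dataState,omitempty"`
}

// pageQueries builds a request grouping results by search query
// (QUERY) while keeping only the rows of a single page.
func pageQueries(pageURL, start, end string) queryRequest {
	return queryRequest{
		Dimensions: []string{"QUERY"},
		DimensionFilterGroups: []*filterGroup{{
			Filters: []*filter{{
				Dimension:  "page",
				Operator:   "equals",
				Expression: pageURL,
			}},
		}},
		StartDate: start,
		EndDate:   end,
		DataState: "ALL",
	}
}

func main() {
	req := pageQueries("https://example.com/some/page.html", "2024-01-01", "2024-01-31")
	out, err := json.MarshalIndent(req, "", "  ")
	if err != nil {
		log.Fatal(err)
	}
	fmt.Println(string(out))
}
```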

package main

import (

"log"

"fmt"

"time"

"os"

"path"

"context"

"strings"

"google.golang.org/api/searchconsole/v1"

)

// YYYY-MM-DD format for time.Parse()/.Format()

var YYYYMMDD = "2006-01-02"

func printHeader(xs []*searchconsole.ApiDataRow, last string) {

nc := 0

ni := 0

for _, x := range xs {

// NOTE: rows are keyed by query here, not by date:

// every row counts towards the totals

nc += int(x.Clicks)

ni += int(x.Impressions)

}

// NOTE: averaging the x.Ctr yields a result discordant

// with what's displayed on the Web Console; likely

// an accumulation of rounding errors. This is much closer:

ct := 0.0

// avoid a NaN when there are no impressions

if ni > 0 {

ct = float64(nc)/float64(ni)

}

fmt.Printf("----------------------------------\n")

fmt.Printf("%-10s %-10s %-5s %s\n", "Clicks", "Impr.", "Ctr.", "Keywords")

fmt.Printf("%-10d %-10d %-5.2f %s\n", nc, ni, ct*100, "Total")

fmt.Printf("----------------------------------\n")

}

func queryKeywordsFull(scs *searchconsole.Service, s, p string) error {

n := time.Now().UTC()

// Flexible input

s = strings.TrimRight(s, "/")

if p == "" || p[0] != '/' {

p = "/" + p

}

args := searchconsole.SearchAnalyticsQueryRequest{

Dimensions : []string{"QUERY"},

DimensionFilterGroups : []*searchconsole.ApiDimensionFilterGroup{

&searchconsole.ApiDimensionFilterGroup{

Filters : []*searchconsole.ApiDimensionFilter{

&searchconsole.ApiDimensionFilter {

Dimension : "page",

Operator : "equals",

Expression : s+p,

},

},

},

},

// 20 *years* ago

StartDate : n.AddDate(-20, 0, 0).Format(YYYYMMDD),

EndDate : n.Format(YYYYMMDD),

DataState : "ALL",

}

saqr, err := scs.Searchanalytics.Query(s, &args).Do()

if err != nil {

return err

}

xs := saqr.Rows

fmt.Printf("----------------------------------\n")

fmt.Printf("Page: %s\n", p)

printHeader(xs, "")

for _, x := range xs {

fmt.Printf(

"%-10d %-10d %-5.2f %s\n",

int(x.Clicks),

int(x.Impressions),

x.Ctr*100,

x.Keys[0],

)

}

return nil

}

func main() {

// Will rely on e.g. environ's GOOGLE_APPLICATION_CREDENTIALS

// to find the credential file

scs, err := searchconsole.NewService(context.Background())

if err != nil {

log.Fatal(err)

}

if len(os.Args) < 3 {

log.Fatalf("%s <site> <page>", path.Base(os.Args[0]))

}

if err := queryKeywordsFull(scs, os.Args[1], os.Args[2]); err != nil {

log.Fatal(err)

}

}
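The comment in printHeader() about averaged CTR can be illustrated with two made-up rows. An unweighted average of per-row ratios is in general different from the ratio of the totals, so the gap may have less to do with rounding than with the averaging itself. `row`, `aggregateCtr` and `meanCtr` are hypothetical names used for this sketch only.

```go
package main

import "fmt"

// row is a stand-in for the wrapper's ApiDataRow, reduced to the
// fields used here.
type row struct {
	Clicks, Impressions, Ctr float64
}

// aggregateCtr divides total clicks by total impressions, as done in
// printHeader(); it returns 0 when there are no impressions.
func aggregateCtr(rows []row) float64 {
	var clicks, impressions float64
	for _, r := range rows {
		clicks += r.Clicks
		impressions += r.Impressions
	}
	if impressions == 0 {
		return 0
	}
	return clicks / impressions
}

// meanCtr is the unweighted average of the per-row CTR values.
func meanCtr(rows []row) float64 {
	if len(rows) == 0 {
		return 0
	}
	var sum float64
	for _, r := range rows {
		sum += r.Ctr
	}
	return sum / float64(len(rows))
}

func main() {
	rows := []row{ // made-up sample data
		{Clicks: 1, Impressions: 2, Ctr: 0.5},
		{Clicks: 1, Impressions: 100, Ctr: 0.01},
	}
	fmt.Printf("aggregate: %.2f%%\n", aggregateCtr(rows)*100) // 1.96%
	fmt.Printf("mean:      %.2f%%\n", meanCtr(rows)*100)      // 25.50%
}
```

A page with very few impressions weighs as much as a popular one in the mean, which is why the aggregate form is the one closer to a site-wide figure.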

Limitations

Most of the previous code is wrapped in a small tool, search-console. It meets my current basic needs, but could be extended in a few ways, and so could this article. For instance:

- Currently, domain ownership must be manually shared between the main user (the one corresponding to your Google account) and the Service account via the Web Search Console interface. Likely, this can be automated.

- Similarly, there should be ways (APIs) to automate the creation of the Service account, e.g. the IAM API.

- Permissions for the Service account may be too coarse; it’s surprising that even with no access to the project, the Service account can still access the data. Perhaps we may not even need to grant the project access to the Search API?

Android DNS issues

You can blissfully cross-compile search-console to run on Android:

$ GOOS=android GOARCH=arm64 go build -o /tmp/search-console search-console.go

# You'll want to install those e.g. in termux's $HOME somehow;

# having a sh(1) wrapper to wrap common options can be convenient

# too

$ adb push /tmp/search-console /storage/XXXX-XXXX/

$ adb push $HOME/.search-console.json /storage/XXXX-XXXX/

From there, you can run it via termux. However, DNS queries will fail; this is a known issue, and there are a few workarounds, including the following, presented in one of the related GitHub issues:

import (

...

"runtime"

"net"

...

)

...

if runtime.GOOS == "android" {

var dialer net.Dialer

net.DefaultResolver = &net.Resolver{

PreferGo: false,

Dial: func(ctx context.Context, _, _ string) (net.Conn, error) {

conn, err := dialer.DialContext(ctx, "udp", "1.1.1.1:53")

if err != nil {

return nil, err

}

return conn, nil

},

}

}

...

View of Leh from the Khardung Pass, in Northern India, Ladakh region by Prajakta Kailas Jadhav Publishing Pictures

Comments

By email, at mathieu.bivert chez: