In the old days, the painting process was often unrefined: Cennino Cennini’s Il libro dell’arte, for instance, is an accumulation of techniques aimed at depicting specific subjects. Those processes have progressively been abstracted, and some elements of science, such as its understanding of light, have been assimilated.

So much so that nowadays we can take a shortcut and speak of an axiomatisation of the painting process: there are small sets of rules, axioms, that can be combined at will to create a wide variety of artwork. This is even closer to the truth for digital art.

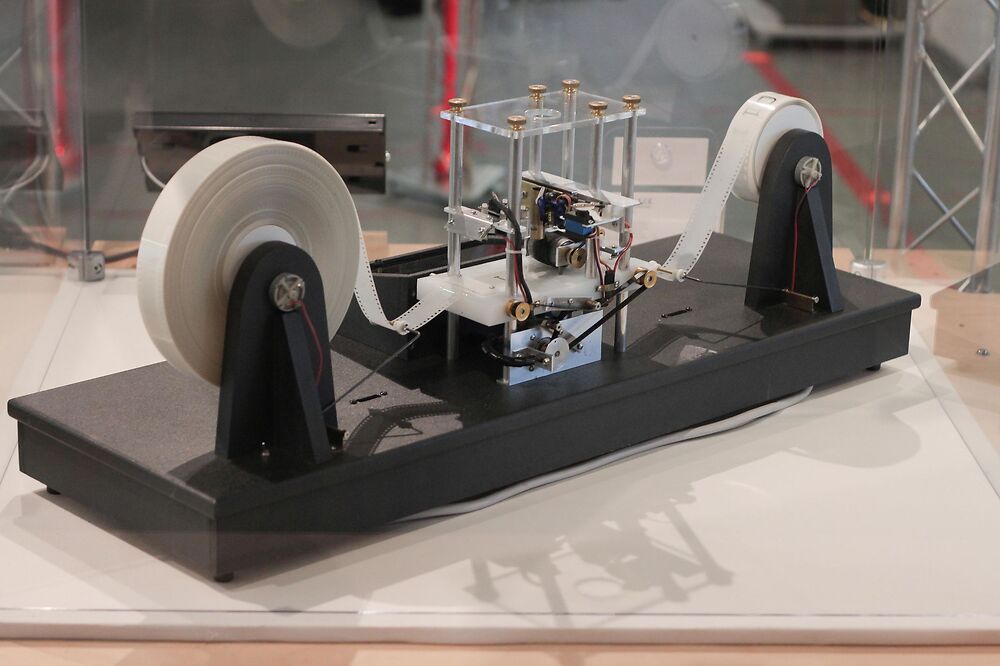

Turing machine, reconstructed by Mike Davey, as seen at Go Ask ALICE at Harvard University; photo by Rocky Acosta

A shape-based painting process

This “axiomatisation” can vary from author to author, but it generally involves representing a picture as a collection of shapes, each of a given color, which for the sake of clarity we will define in terms of HSV: hue, saturation and value. Those shapes are connected by a variety of edges, which can be more or less sharp.
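As a rough illustration, this shape-and-edge representation could be modeled as follows. The `Shape` and `Edge` classes, the `sharpness` scale (0.0 for a lost edge, 1.0 for a hard one) and the sample colors are all hypothetical choices for this sketch; only `colorsys.hsv_to_rgb`, from Python's standard library, is an existing function, and it expects each HSV component in the 0.0 to 1.0 range.

```python
import colorsys
from dataclasses import dataclass


@dataclass
class Shape:
    """A flat region of a single color, in HSV (each component in [0, 1])."""
    name: str
    hue: float
    saturation: float
    value: float

    def to_rgb(self):
        # colorsys works with floats in [0, 1] for every channel.
        return colorsys.hsv_to_rgb(self.hue, self.saturation, self.value)


@dataclass
class Edge:
    """A boundary between two shapes (hypothetical scale: 0.0 = lost, 1.0 = hard)."""
    a: Shape
    b: Shape
    sharpness: float


sky = Shape("sky", hue=0.58, saturation=0.35, value=0.85)
hill = Shape("hill", hue=0.30, saturation=0.50, value=0.40)
horizon = Edge(sky, hill, sharpness=0.7)

print(sky.to_rgb())
print(f"value contrast across the horizon: {abs(horizon.a.value - horizon.b.value):.2f}")
```

Keeping the value as its own field makes it easy to reason about a picture's value structure independently of hue and saturation.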

Note: Such a representation is a “gross”, practical pixelization, through variable-size “pixels”. A key aspect for a painter is to find a few shapes that can encode a lot of (visual) information, while designing those shapes to be rhythmically harmonious. This is particularly evident in landscape “value” (monochromatic) studies.
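One way to make the “variable-size pixels” idea concrete is a quadtree, a standard image-processing technique swapped in here purely as an illustration (painters obviously don't proceed this way): a square region is split into four until each part is nearly uniform in value, and each uniform part is then painted as one flat shape. The `pixelize` function, the `tolerance` parameter and the 4×4 grid are hypothetical; the grid's side must be a power of two.

```python
def pixelize(grid, x, y, size, tolerance, out):
    """Quadtree sketch: paint a square region as one flat 'pixel' if its values
    are within `tolerance` of each other, otherwise split it into four."""
    cells = [grid[j][i] for j in range(y, y + size) for i in range(x, x + size)]
    if size == 1 or max(cells) - min(cells) <= tolerance:
        # (column, row, side length, mean value) of one flat shape
        out.append((x, y, size, sum(cells) / len(cells)))
        return
    half = size // 2
    for dy in (0, half):
        for dx in (0, half):
            pixelize(grid, x + dx, y + dy, half, tolerance, out)


# Hypothetical 4x4 value study (0.0 = black, 1.0 = white): light sky over a darker hill.
grid = [
    [0.9, 0.9, 0.9, 0.9],
    [0.9, 0.9, 0.9, 0.9],
    [0.3, 0.3, 0.2, 0.6],
    [0.3, 0.3, 0.2, 0.2],
]
shapes = []
pixelize(grid, 0, 0, len(grid), tolerance=0.1, out=shapes)
print(len(shapes), "flat shapes instead of", len(grid) ** 2, "pixels")
```

This prints `7 flat shapes instead of 16 pixels`: large calm areas collapse into big shapes, while only the busy corner keeps small ones. Raising `tolerance` trades detail for simplicity, much like squinting at the subject.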

Note: Selecting a limited palette, that is, restricting the number of colors we can express, can help us achieve a certain mood and uniformity. We can already see it in the previous landscape thumbnails. In the programming realm, we find similar limitations here and there, such as NASA’s The Power of 10: Rules for Developing Safety-Critical Code.
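In its simplest form, restricting the palette amounts to snapping every tone to the nearest allowed one. The sketch below does so for values only, as in a monochromatic study; the three-value palette and the sample row are hypothetical, and actual colors would need a distance between HSV (or RGB) triplets rather than a difference between single numbers.

```python
def restrict_palette(values, palette):
    """Snap each value (0.0 = black, 1.0 = white) to the closest palette entry."""
    return [min(palette, key=lambda p: abs(p - v)) for v in values]


palette = [0.15, 0.5, 0.85]         # hypothetical three-value palette: dark, mid, light
row = [0.05, 0.3, 0.42, 0.7, 0.95]  # hypothetical values sampled from a reference
print(restrict_palette(row, palette))  # [0.15, 0.15, 0.5, 0.85, 0.85]
```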

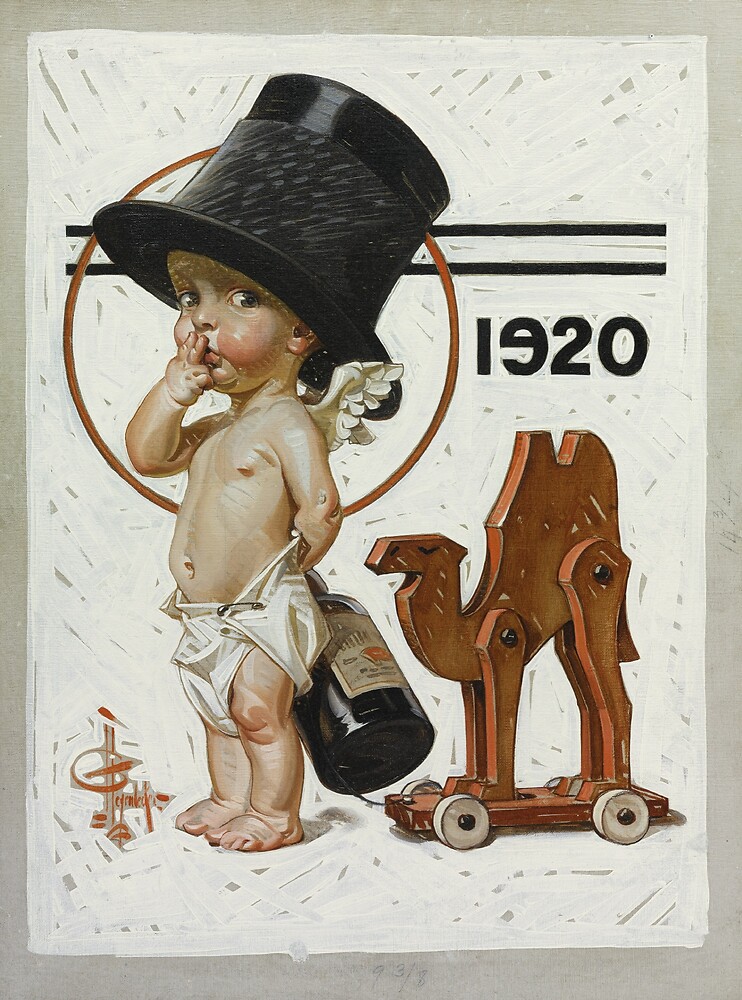

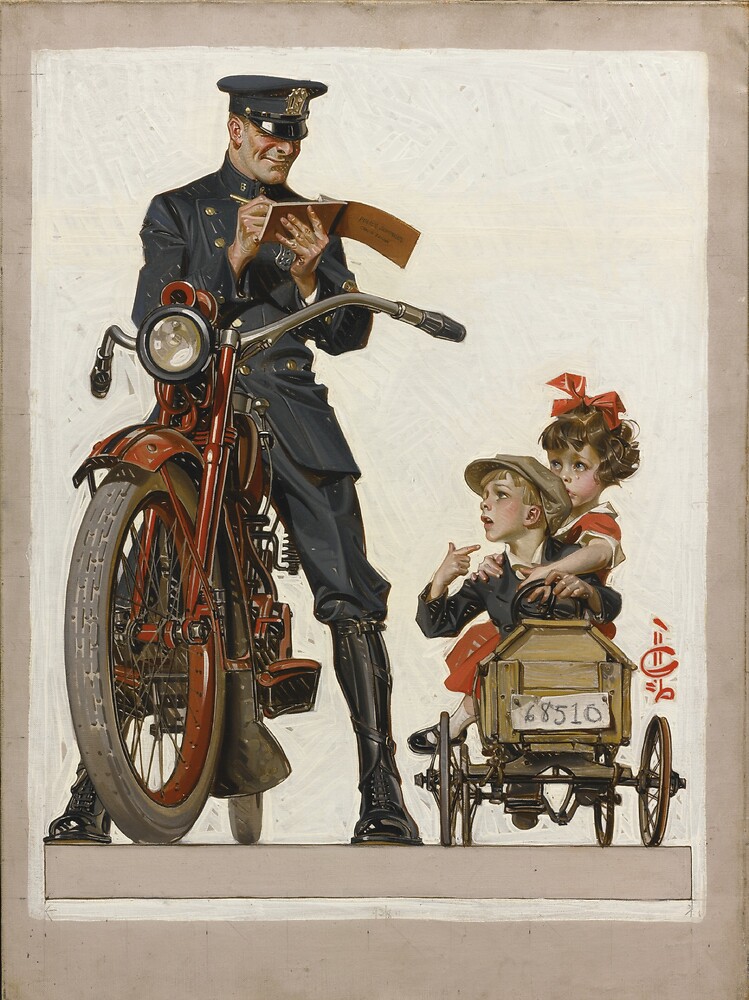

Such shapes are also visible in the work of painters who relied on strongly delineated brushstrokes, such as Leyendecker, Sargent or Zorn.

New Year’s Baby, The Saturday Evening Post, January 3, 1920, oil on canvas, by Joseph Christian Leyendecker

Traffic Stop, The Saturday Evening Post, June 24, 1922, oil on canvas, by Joseph Christian Leyendecker

Mrs Henry White, 1883, oil on canvas, by John Singer Sargent

Portrait of Lady Agnew of Lochnaw, 1892, oil on canvas, by John Singer Sargent

Midsummer Dance, 1897, oil on canvas, by Anders Zorn

William Howard Taft (27th U.S. president), 1911, oil on canvas, by Anders Zorn

Here, Marco Bucci emphasizes the importance of shapes from a design and readability point of view:

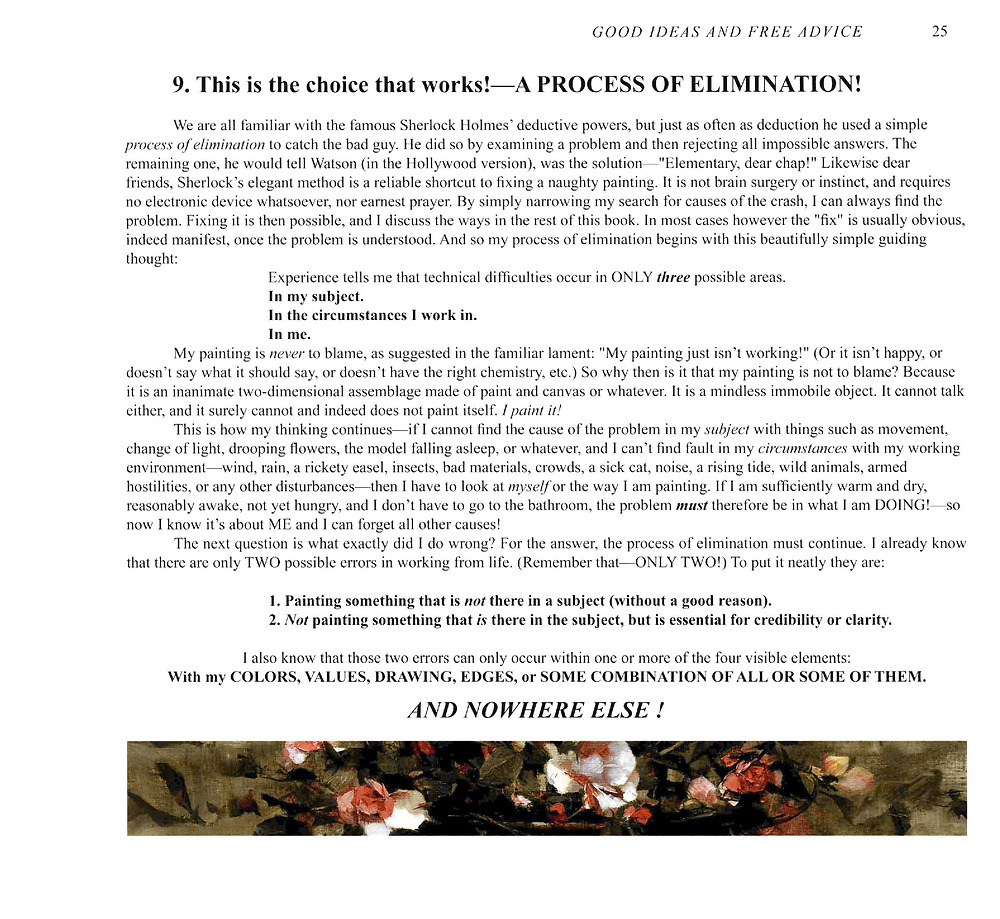

Here’s how Richard Schmid uses deductive reasoning, with a similar shape-based system, to develop a painting and to identify and fix errors:

Page 25 of Alla Prima II: Everything I know about painting, by Richard Schmid

Joshua Been, a.k.a. the Prolific Painter, has perfected a similar approach:

He presents it in the Visual Language section of his book, available for free online:

Learn to see learn to paint, page 12, by Joshua Been

Learn to see learn to paint, page 13, by Joshua Been

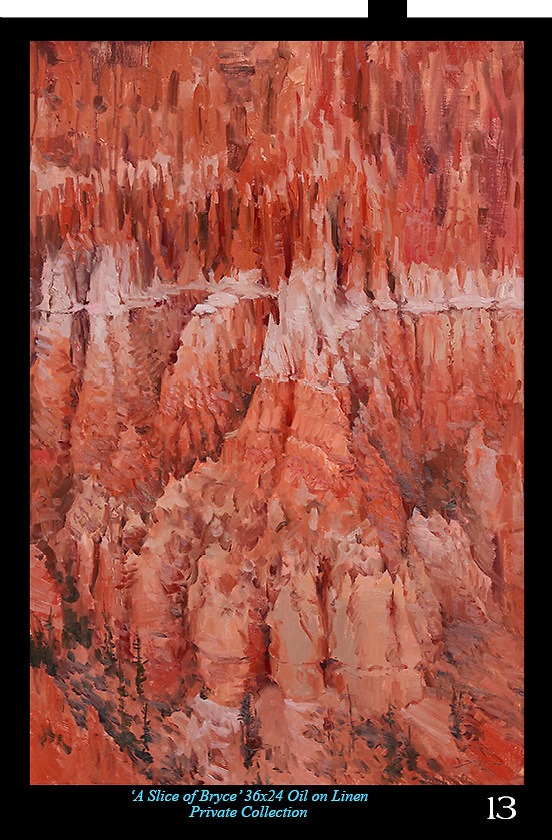

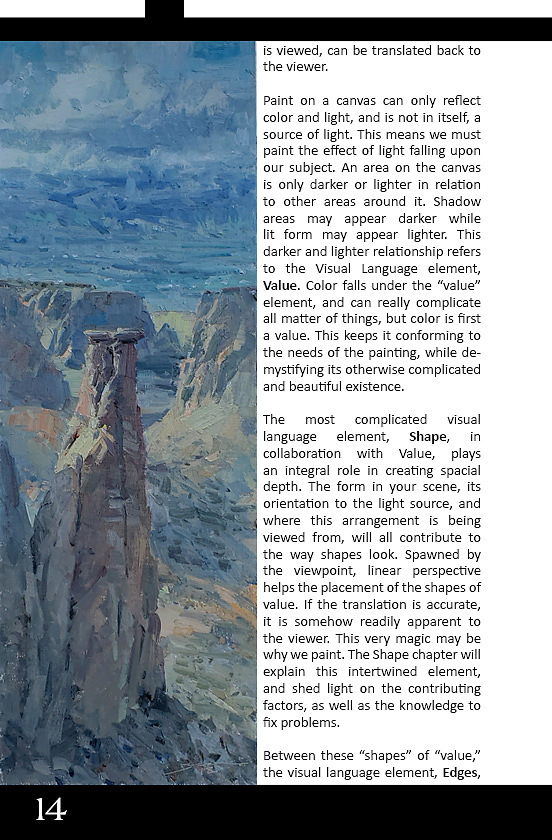

Learn to see learn to paint, page 14, by Joshua Been

Learn to see learn to paint, page 15, by Joshua Been

Axioms, Turing machine

Given how versatile modern computers are, it may be surprising to learn that a computer cannot do literally anything. To simplify, it is ultimately limited to doing arithmetic, and this limitation is insurmountable: it is rooted in the mathematical foundations of computer science.

A great deal of mathematics has been axiomatized: in essence, the idea is that a subdomain of mathematics, such as geometry, can be characterized by a small set of simple rules, which one can combine to derive all kinds of more sophisticated results, such as Thales’ theorem or Pythagoras’ theorem.

We can think of such rules as small Lego pieces that can be combined over and over to represent a rather pixelated version of a subset of reality.

Lego Castle set 70401: Gold getaway, by Ɱ

As far as computers are concerned, their behavior is, as previously mentioned, ultimately limited by a set of rules: a kind of restricted axiomatic system able to express what are called computable functions. There are many such systems, some of which are equivalent: roughly speaking, different models can express the same concepts, only in different ways.

Similarly, a set of blue Lego pieces and the same set in red have the same expressive potential; only the color of the constructions changes.

One of the most common models is the Turing machine. It mostly consists of:

- a (theoretically) infinite tape of cells, each holding a symbol, and a head that reads and writes the symbol of the cell it is on;

- a finite set of instructions, such as: if we read a “0” while in the state “reading a number”, move the head one cell to the right and stay in the state “reading a number”.
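To make the tape-and-instructions description concrete, here is a small Turing machine simulator in Python. The rule table is hypothetical: it extends the “reading a number” instruction above into a machine that adds one to a binary number. The tape is stored as a dictionary, so it can grow as far as needed in either direction, which is the practical stand-in for a theoretically infinite tape; `run`, `RULES` and the `max_steps` safeguard are names made up for this sketch.

```python
BLANK = "_"

# Hypothetical rule table: (state, symbol read) -> (symbol to write, head move, next state).
# It adds one to a binary number written on the tape.
RULES = {
    ("reading a number", "0"): ("0", +1, "reading a number"),
    ("reading a number", "1"): ("1", +1, "reading a number"),
    ("reading a number", BLANK): (BLANK, -1, "carry"),  # end of the number: go back
    ("carry", "1"): ("0", -1, "carry"),                 # 1 + 1 = 10: keep carrying
    ("carry", "0"): ("1", 0, "halt"),                   # 0 + 1 = 1: done
    ("carry", BLANK): ("1", 0, "halt"),                 # carry past the leftmost digit
}


def run(rules, tape_input, state="reading a number", max_steps=10_000):
    """Simulate a Turing machine and return the non-blank part of the tape."""
    tape = dict(enumerate(tape_input))  # sparse tape: grows as needed in both directions
    head = 0
    steps = 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = tape.get(head, BLANK)  # unvisited cells hold the blank symbol
        write, move, state = rules[(state, symbol)]
        tape[head] = write
        head += move
        steps += 1
    cells = range(min(tape), max(tape) + 1)
    return "".join(tape.get(i, BLANK) for i in cells).strip(BLANK)


print(run(RULES, "1011"))  # 11 + 1 = 12, printed as 1100
```

Everything the machine “knows” about arithmetic lives in those six rules; the simulator itself only looks up the current state and symbol, writes, and moves.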

A key aspect to understand is that computer scientists use lots of conventions, layer upon layer of arbitrary rules, to make such abstract tools usable by humans.

For instance, the characters you’re currently reading don’t look like numbers to you, but to your computer they are: nearly everything your computer handles is ultimately encoded as numbers, most often in base two, as zeroes and ones. A video, for example, is one gargantuan number: programmers taught computers the conventions needed to make sense of this otherwise chaotic stream of digits. Those very conventions are themselves encoded as numbers, and programmers taught computers how to interpret them using more primitive conventions, and so forth, until we reach the most primitive conventions, which, to simplify, are close to what a Turing machine can achieve.
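A small Python illustration of this layering: the character codes come from the Unicode convention, the bytes from the UTF-8 convention, and reading those bytes together yields a single (here modest) number. The sample text is arbitrary.

```python
text = "Zorn"

for ch in text:
    code = ord(ch)  # Unicode code point: the number the convention assigns to ch
    print(ch, code, format(code, "08b"))  # the same number, written in base two

# UTF-8 (another convention) turns the text into bytes; read together,
# those bytes are just one bigger number.
as_number = int.from_bytes(text.encode("utf-8"), "big")
print(as_number)

# Applying the same conventions in reverse gives the text back.
length = (as_number.bit_length() + 7) // 8
print(as_number.to_bytes(length, "big").decode("utf-8"))
```

Without the conventions, `as_number` is just an integer; it only becomes “Zorn” because both sides agree on how to read it.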

A rather impressive, more sophisticated Lego-based Turing machine: the RUBENS project, by students of the ENS Lyon, France:

Some more theoretical explanations, for those who prefer a less text-heavy approach:

Comments

By email, at mathieu.bivert chez: